TiDB on AWS EKS ~PoC report of DMM video~

Introduction

This is the 12th day of the DMM Group Advent Calendar 2021. Shigo Mori, Yoshihiko Goto, and Jun Takagi from the DMM.com Video Distribution Division will be in charge.

In the DMM video, various improvements such as making the API layer microservices and front layer Web components are progressing, but the database layer is still under consideration for improvement methods. This time, I will share the PoC of DB improvement in TiDB.

DMM video database issues

Operation costs

This is the database structure of our division.

Due to functional division, there are multiple clusters with such a configuration. Since it is operated on-premises, there are parts that are difficult to operate, such as resource procurement and network configuration management. We considered using NewSQL because we expected to reduce operating costs and consolidate the environment.

Scalability and Consistency

If more users come than initially expected during live distribution or campaigns, the number of connections to the DB and the amount of writing will increase. Symptoms such as CPU load spikes and replication delays may occur on the DB instance. In particular, if there is a delay in replication around purchases, it is not desirable because users may receive inquiries such as "I made a purchase, but it is not reflected on the purchased screen."

Selecting DB technology in the NewSQL era

There are various options for selecting DB technology. As 2021 is said to be the NewSQL era, I was careful not to adopt outdated technology. Another limitation is that the selection of DB technology this time is an improvement of the existing system (PHP + MySQL), so technologies other than RDB were excluded from the options.

Don't Adopt Inappropriate Technology

What are NewSQL products?

Wikipedia explains the definition of NewSQL in terms of three elements.

- Relational database

- ACID guarantees of conventional relational database systems

- Scalability (like NoSQL systems)

| ー | MySQL Single Host | MySQL Replication Cluster | MongoDB | NewSQL< /th> |

|---|---|---|---|---|

| Relational database (SQL) | ✅ | ✅ | ❌< /td> | ✅ |

| ACID Guaranteed | ✅ | ❌ (eventual consistency) | ❌ (near eventual consistency) | ✅ |

| scalability | ❌ | ❌( read only) | ✅ | ✅ |

What kind of technology is NewSQL? came out in various ways.

I will summarize the results of my research.

Is NewSQL technology really reliable?

When adopting new technology, there was a concern that it was "too new" and risky. First, I read the "What's Really New with NewSQL?" paper on the NewSQL technology itself. Then, there was a description that could be taken as "NewSQL's technical maturity is not low".

Also, Google Cloud Spanner and TiDB are already in use by many companies, so I've found that NewSQL technology is not "too new".

Is NewSQL a silver bullet?

One of the things NewSQL products do better than NoSQL products is their support for SQL and ACID, so you might be wondering if they are all perfect and good. However, a perfect design does not exist in the world, and "design" is always traded off. In other words, it is inevitable that if you choose "this", "that" will not be chosen.

This point also applies to the NewSQL product, where we traded off the choice between "scalability" and "latency". If you select "Scalability", communication between servers will occur because it is configured as a distributed system instead of a single server, and "latency" will be high. Instead, the "scalability" property allows the "throughput" to grow infinitely.

So NewSQL is not a silver bullet. Taking TiDB as an example, it seems better not to use TiDB unless transaction latency of 1ms or more is acceptable.

*Don't get me wrong: Not all queries will have higher latency when going from traditional DB to TiDB. In the case of a single record result query or write transaction, the communication latency increases as the single server becomes a distributed system. However, queries for multi-row results may be processed in parallel on multiple servers, so latency may be lower than traditional DBs. In addition, for data analysis query scenarios, proper use of TiDB's column store (TiFlash) is likely to significantly improve queries.

How to choose a NewSQL product

I checked the following NewSQL products.

| Feature | Amazon Aurora | Google Cloud Spanner | YugaByteDB | CockroachDB | TiDB |

|---|---|---|---|---|---|

| Elastic scalability (Both read and write) | ❌ | ✅ | ✅ | ✅ | ✅ |

| Automated failover and high availability | ✅ | ✅ | ✅ | ✅ | ✅ |

| Distributed ACID transactions | ✅ | ✅ | ✅ | ✅ | ✅ | SQL compatibility and protocol | MySQL and PostgreSQL | Proprietary | PostgreSQL | PostgreSQL | MySQL |

| Open Source License | ❌ | ❌ | Apache 2.0 | BSL and CCL | Apache 2.0 |

| Open Source Contributor Count | ❌ | ❌ | 100+ | 300+ | 500+ |

| HTAP | ❌ | ❌ | ❌ | ❌ | ✅ |

※This The table is from the PingCAP Co., Ltd. homepage.

Amazon Aurora

According to the Amazon Aurora paper, Aurora's storage layer is a distributed data system, but the computing node capacity is similar to that of a conventional MySQL server, and the pool buffer is limited to the node's memory like a single server. I'm here. Especially since the only node in charge of writing is the Master node, I don't think it has the scalability of "Both read and write" as shown in the table above.

Google Cloud Spanner

Google Cloud Spanner is a NewSQL product developed by Google, and it has good scalability and production experience. However, MySQL protocol is not supported. This PoC is omitted because it is a trial to improve the existing system (PHP + MySQL). I think it's a powerful option for new service development.

YugaByteDB & CockroachDB

YugaByteDB and CockroachDB are images of NewSQL products similar to Google Cloud Spanner. Scalability is no problem. However, it only supports the PostgreSQL protocol, so I didn't choose it for this PoC for the same reason as above.

TiDB

TiDB is a distributed database product that goes beyond the concept of NewSQL. Common features of NewSQL products are 1) horizontal scalability, 2) strong consistency (ACID guarantees), 3) high availability, and 4) relational database. TiDB also features HTAP (Hybrid Transactional and Analytical Processing) and can be used for real-time analysis (big data). If you want to know more, I recommend Koba's article here.

In response to this PoC issue in our business division, when we summarized the "personal image" of the DB product we wanted to verify as follows, TiDB fit all of them.

TiDB PoC Goals

Our system is a large monolithic system with a lot of source code and multiple databases. The current situation is that neither the function nor the DB can be separated. Since it is practically difficult to switch the entire DB to TiDB, the team decided to discuss and set a goal and deadline for the PoC of TiDB.

What you can do to build an environment

There are several ways to build TiDB, but this time we will build and verify with AWS EKS. According to the TiDB documentation, the hardware requirements for the production environment seem to be strict, so I'll try to see if there are any problems with building on AWS.

Verification target function is only purchase function

It does not verify all the functions of the existing system, but only the "purchase" part. There are only 3 pages related to the purchase flow (Basket, Purchase, and Purchased page) and we validate all SQL compatibility and performance for these pages.

Scalability and write performance

Since the experience value of purchased TPS in the current production environment is about 50, I wonder if TiDB can be scaled to 5 times, i.e. TPS is 250 to verify if it can be raised. Also, in order to verify TiDB's scale-out/scale-in operation, we first constructed it with the minimum number of servers, and then verified whether it would be possible to easily increase the number of servers using the scale-out operation method and increase the TPS. To do.

Issues of concern

We will investigate the following issues when introducing TiDB:

The content of the TiDB PoC is as follows for the above goal definition. rice field:

Build TiDB with AWS EKS

First, build the TiDB environment. Since our division builds the development environment on AWS, TiDB is also built on the AWS environment. There are two main ways to build TiDB. ① Deploy to vm with TiUP ② Deploy to Kubenetes with tidb-operator. With AWS EKS, management of VMs is offloaded to the EKS node pool. Also, since I want to touch CloudNative technology, I chose to build PoC this time using AWS EKS + tidb-operator.

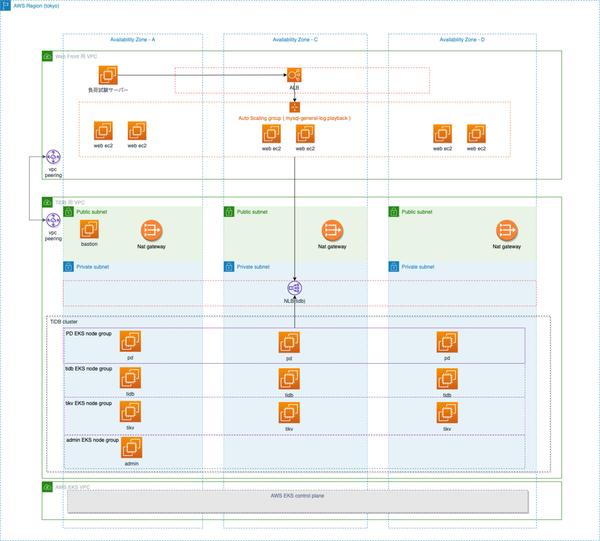

Here's a schematic:

For fault tolerance, we use Multi-AZ in Tokyo region of AWS.

For the security of the DB, the VPC on the user side (application side) and the VPC on the DB are completely separated, and the application connects to the internal VLB on the DB VPC side in the form of VPC peering. Also, put most of the servers in the DB VPC (other than the Internet gateway) in the Private subnet.

Inside a TiDB cluster there are several components:

Due to the different characteristics of each component, the resource requirements are also different. Divide by AWS EKS node-group to give optimal resources.

Infrastructure as Code (IaC) was considered, so it was realized as follows.

Configuring a VPC/subnet in Terraform

Originally, eksctl creates a VPC and a subnet at once, so it's easy, but the IP Range of our AWS VPC is unified and managed, and the VPC itself is distributed, so You have to specify vpc id and subnet id in eksctl yaml. In such a case, you will not be able to automatically create the subnets required for EKS, so you will have to finely configure subnets/routes/nats in Terraform. It takes a little effort.

Build AWS EKS cluster with eksctl

TiDB has several components, each a Kubernetes Pod in EKS. Each component has different resource requirements, and we wanted to place them in EKS Node Groups with different specifications, so we planned the EKS Node Groups as follows.

| EKS Node Group | TiDB Components | Spec Requirements |

|---|---|---|

admin | Monitoring (grafana , prometheus) | Normal |

pd | Scheduling (pd-server) | SSD disk |

tidb | Compute (tidb-server) | Memory, CPU, Network |

tidb | Storage (tikv-server) | Memory, CPU, Network, SSD Disk |

How each component (K8S Pod) was placed in the correct EKS Node Group, but I decided to use Kubernetes' "Taint and Toleration". Put a trits in each Node Group, then in the tidb cluster definition specify the trits of his Node Group where each Pod's toleration is placed.

Originally I tried to realize IaC only with Terraform, but at that time terraform-provider-aws did not support trints. For that, I chose the official eksctl to build AWS EKS.

* As of December 2021, terraform-provider-aws seems to be able to support trints.

The eksctl configuration is defined in yaml and is very simple:

---apiVersion: eksctl.io/v1alpha5kind: ClusterConfigmetadata:name: tidb-clusterregion: ap-northeast-1vpc: # Not required if not using an existing VPC id: "vpc-hoge" subnets:private: # a /c/d 3 AZ...public: # a/c/d 3 AZ...nodeGroups:- name: admin # omitted- name: pd # omitted- name: tidb # omitted- name: tikv instanceType: c5.4xlarge desiredCapacity: 3 labels:dedicated: tikv taints:dedicated: tikv:NoSchedule # Notation for placing tikv pods in tikv node group ssh:allow: truepublicKeyPath: ./config/id\_rsa.pub privateNetworking: true

Solution for insufficient IP address The IP Range of our verification VPC was /24. The default setting of AWS EKS CNI consumes a lot of IP addresses, so it is easy to run out of IP addresses. However, the problem can be solved by changing the following settings.

# Reference URL: https://docs.aws.amazon.com/eks/latest/userguide/cni-env-vars.htmlkubectl set env ds aws-node -n kube-system AWS_VPC_K8S_CNI_CUSTOM_NETWORK_CFG=truekubectl set env ds aws -node -n kube-system WARM_IP_TARGET=3# Confirm kubectl describe daemonset aws-node -n kube-system | grep -A5 Environment

Build a tidb cluster with TiDB-operator

It's a simple procedure.

Confirmation procedure

The output of kubectl get svc is the entry point for service TiDB called ${ClusterName}-tidb. EXTERNAL-IP is the AWS VLB domain and TiDB entry point for your application.

You can also use your existing mysql cli to directly connect to TiDB and check (requires port-forward).

kubectl port-forward -n ${Namespace} svc/${ClusterName}-tidb 4000 > /tmp/pf4000.out &mysql -h 127.0.0.1 -P 4000 -u rootmysql> select tidb_version()\ GGrafana

The service clustername-grafana in the output of kubectl get svc is Grafana. You can access it with a browser (user name and password can be set in tidb-monitor.yaml). With dashboards useful for monitoring already in place, it is useful for daily monitoring and investigations when problems occur.

TiDB Dashboard

There is also a TiDB Dashboard on the TiDB PD server. You can access the monitoring screen with a browser by using kubectl port-forward.

kubectl port-forward svc/${ClusterName}-discovery -n ${Namespace} 10262:10262 &>/tmp/portforward-pd.log &Browser localhost:10262 can access. There are many features, but the features that were useful for PoC are mainly "SQL Statements" and "Slow Queries".

Confirming AWS EKS Performance

According to the TiDB documentation, the hardware requirements for the production environment seemed to be demanding.

The main reason is that TiDB's storage engine is RocksDB, which is implemented with an LSM-tree data structure. LSM-tree is characterized by the fact that "random write" becomes "sequential write" and can be speeded up, but instead of this, the amount of reading and writing seems to increase. Causes include:

TiDB uses Raft-based Multi-Region technology on RocksDB to set the number of replicas for redundancy, and the default is 3. If you want to increase redundancy, you can increase the number of replicas. In terms of capacity, the data compression architecture is adopted, so the capacity efficiency may be higher than that of a normal DB. Since it has three copies by default, it uses more capacity than a single DB, but in the case of a design that considers replication, etc., it is possible to use a distribution mechanism instead of a full clone, which is a product such as MySQL. Depending on the type, very high capacity efficiency can also be expected. In addition, although sufficient network bandwidth is required for network bandwidth, a technology called hybernete region reduces the communication of data replicas that are less accessed. For data persistence, unlike other DBs, an intermediate data write called RaftLog occurs before the actual data is written, so I/O consideration in that respect is necessary.

According to the official site, 10 Gbps bandwidth is required for the network, especially around I/O, and PCI-E SSD is recommended for the TiKV server disk.

In line with the above requirements, we chose the following servers of the aws c5 series.

| Server type | instanceType | Number | vCPU | Memory GB | network bandwidth |

|---|---|---|---|---|---|

| pd | c5.xlarge | 3 | 4 | 8 | 10GBps |

| tikv | c5.4xlarge | 3 | 16 | 32 | 10GBps |

| tidb | c5.4xlarge | 2 | 16 | 32 | 10GBps |

| monitor | c5.2xlarge | 1 | 8 | 16 | 10GBps |

Here is what we decided upon:

Instance EBS I/O performance and network bandwidth

Because it runs inside a Kubenetes Pod, rather than directly inside an instance, AWS EBS becomes a Kubenetes Persistent Volume (PV) and network traffic becomes a Kubenetes CNI. I checked to see if there was any performance degradation. There was no problem in the performance test with AWS EKS for these two points.

iperf3Now I can check the performance of AWS EKS itself.

Purchase simulation implementation

Our system consists of a web server (Apache, PHP) + MySQL. This time, mainly because we want to verify his MySQL compatibility and scalability of TiDB, we will test the web server (Apache + PHP) with the same version as the current service. Since the verification target is only "the function for users to purchase products", I would like to test only this part of the code, but the overall implementation was monolithic and the purchase part code was complicated and could not be extracted cleanly.

We have developed the following test methods based on existing purchasing processes:

This saves me time by simply implementing MySQL general log playback instead of messing with existing complex code. From the point of view of the purpose of DB verification, there was no problem.

The parameterized SQL General log looks like the image below:

210527 10:58:22 48 Connectslave 48 Init DBslave_db 48 Query SELECT * from hoge WHERE `user_id` = '__USER_ID__' 49 Connectmaster 49 Init DBmaster_db 49 Query INSERT INTO fuga (user_id)VALUES ('__USER_ID__') 49 Query SELECT LAST_INSERT_ID() 49 PHPExec setParam('FUGA_ID', getLastResultOneValue()) 49 Query SELECT * fuga where id = '__FUGA_ID__'Originally, the purchase process is divided into several HTTP communications, but this method can be combined into a single URL, and Apache Bench can be easily used for performance testing.

Performance Testing and Scaling Out

We prepared for performance testing by importing hundreds of millions of production-scale records into the test environment. The performance test is divided into two types of “purchase load” and “noise load” and applied in parallel. The reason is to make the DB Read:Write ratio close to the actual one in order to correctly measure the "purchased TPS".

Scale out

First tidb-server has 2 and tikv-server has 3 TPS threshold is 110. This number has exceeded the experience of production MySQL, but we want to achieve about twice as much, so we will scale out. The procedure is very simple.

First, scale out the EKS Nodegroup.

# number of tidb nodes : 2 -> 7eksctl scale nodegroup --cluster=dig-ec-tidb-cluster --nodes=7 --nodes-min=7 --nodes-max=7 tidb# number of tidb nodes : 3 -> 5eksctl scale nodegroup --cluster=dig-ec-tidb-cluster --nodes=5 --nodes-min=5 --nodes-max=5 tikv

and tidb- Edit cluster.yaml to reflect.

tikv:baseImage: pingcap/tikv- replicas: 3+ replicas: 5 tidb:baseImage: pingcap/tidb- replicas: 2+ replicas: 7

apply

kubectl apply -f config/tidb/tidb-cluster.yaml -n tidb-cluster

Now tidb-server and tikv-server pod is increased. tidb-server is a stateless server that will be put into service as soon as it is spun. tikv-server is a server with Persistent Volumes (PVs), so data is gradually migrated to this server when it is added to the cluster. The PD server is responsible for this scheduling. In Grafana's PD dashboard, you can see the leader and region being moved as shown in the graph below.

* Region is a data segment. The same data segment has 3 or more replicas and becomes a Raft-Group. Leader is the node responsible for Write/Read of Raft-Group, other nodes are followers. The PD server distributes the amount of Regions and Leaders to each tikv-server in a well-balanced manner.

Scaling out tidb-server to 7 and tikv-server to 5 You have reached your target of 250 purchased TPS. Here are the points for scaling out:

This concludes the performance test.

Scale in

After the performance test was over, we scaled in and salvaged the server. The scale-in operation is a little more complicated. There are two reasons:

Let's see an example of scaling in a single tikv-server. First select the tikv pod you want to stop and repeat the following steps for each pod

# Check tikv pod name you want to stop with kubectl # Check tikv store id from tikv pod name in tidb cluster json ID=$(kubectl get tc ${CLUSTER_NAME} -n ${Namespace} -ojson jq -r ".status.tikv.stores | .[] | select ( .podName == \"${POD_NAME}\" ) | .id")# offline tikv node with pd-ctl kubectl port-forward -n ${Namespace} svs/${CLUSTER_NAME}-pd 2379:2379pd-ctl store delete ${ID} # Rest assured. The deletion here is not to delete the data at once, it is a command to safely delete the data after migrating it to another node # Wait until the store state (state_name) becomes `Tombstone` watch pd-ctl store ${ID}# Unbind TiKV and PV# Check Pod's PesistentVolumeClaim PVC_NAME=$(kubectl get -n ${Namespace} pod ${POD_NAME} -ojson | jq -r '.spec.volumes | .[] | select (.name == "tikv") | .persistentVolumeClaim.claimName')echo $PVC_NAME# Delete PesistentVolumeClaim: kubectl delete -n ${Namespace} pvc ${PVC_NAME} --wait=false# Delete the TiKV pod itself kubectl delete -n ${Namespace} pod ${POD_NAME}The above steps complete the TiDB Cluster offline work. Then reclaim the AWS instance with the following steps:

Resolving Concerned Issues

Auto increment id Issues

As a usage of TiDB, auto increment id is not recommended. Poor use of auto increment ids in TiDB can lead to two kinds of problems.

If the use of auto increment id is unavoidable due to business logic, as of December 2021 TiDB v5.0+ has a simpler solution. Use the NONCLUSTERED table.

CREATE TABLE t( id int PRIMARY KEY NONCLUSTERED AUTO_INCREMENT, /* NONCLUSTERED : data segment of table is not related to this id column */ c int)AUTO_ID_CACHE=100/* reduce numbering cache */ SHARD_ROW_ID_BITS = 4 /* Put 4 random bits in inner rowid to avoid HOTSPOT problem */PRE_SPLIT_REGIONS=3 /* Pre-split table data segment into 8 (2^3) */;

We were using TiDB v4.x at the time, so we couldn't use the simple method above. I did my best and was able to achieve the same effect as the above method, but since it is already old knowledge, I will omit it. For those who are interested db tech showcase 2021 >

Data migration challenges

Various tools are provided in echosystem of TiDB. Data migration tools alone include TiDB Lightning, TiDB Data Migration and Dumpling. Conversely, there are two data synchronization tools from TiDB to MySQL: TiDB Binlog and TiCDC. Since each tool has its own tools, it is easy to get lost. Also, it is important to decide whether to switch all at once or to switch after fully verifying SQL compatibility with the dual-write method.

Here is how to use different data migration tools for echosystem:

Our This is a transition plan that we considered according to the situation of the business division:

Compatibility issues with MySQL

TiDB supports the MySQL protocol. If you just change the PHP + MySQL source code from the MySQL endpoint to the TiDB endpoint, it should work just fine. However, TiDB is not 100% MySQL compatible. According to the official site, there is a summary document of incompatible parts, but it is still not 100%, so it is necessary to test the function thoroughly.

In this test, there are about 140 SQL statements related to the purchase function, of which only 8 require modification. I've grouped it into 3 types:

2 SQLs about NO_ZERO_DATE/NO_ZERO_IN_DATE sqlmode

Syntax like DEFAULT '0000-00-00 00:00:00' for default value used when create table is deprecated as of MySQL 5.6.17. TiDB is also deprecated for MySQL 5.7 compatibility.

3 SQLs with ONLY_FULL_GROUP_BY sqlmode

Similar situation to the above, but the current system's MySQL @sql_mode does not contain ONLY_FULL_GROUP_BY, which is the default sqlmode used by TiDB.

3 SQLs about SLOW SQL

When the performance test was not going well, I noticed Slow SQL by looking at the "SQL Statements" and "Slow Queries" screens on the TiDB Dashboard. All three Slow SQL patterns were the same. A simple summary is as follows.

Table structure:

CREATE TABLE `orders` (`id` int(11) NOT NULL PRIMARY KEY NONCLUSTERED AUTO_INCREMENT,`user_id`varchar(20) NOT NULL,KEY `idx_user_id`(`user_id`)) AUTO_ID_CACHE=100 SHARD_ROW_ID_BITS=4 PRE_SPLIT_REGIONS =3 ;CREATE TABLE `order_items` (`id` bigint(20) NOT NULL PRIMARY KEY NONCLUSTERED AUTO_INCREMENT,`price` int(11) NOT NULL,`order_id` int(11) NOT NULL,`state` enum('active ', 'disabled') NOT NULL,KEY `idx_order_id`(`order_id`)) AUTO_ID_CACHE=100 SHARD_ROW_ID_BITS=4 PRE_SPLIT_REGIONS=3 ;SELECT statements that take several minutes:

SELECT i.id, i.order_id, i.price FROM orders AS o, order_items AS iWHERE o.id = i.order_id and i.state = 'active' and o.user_id = '?' ORDER BY i. id DESC;

The purpose of the SQL is to filter by user_id in the parent table and give the child tables that belong to the parent table. However, the child table data is filtered by the condition of state = 'active'. The execution plan will be:

explain SELECT i.id, i.order_id, i.price FROM orders AS o, order_items AS iWHERE o.id = i.order_id and i.state = 'active' and o.user_id = '?' ORDER BY i .id DESC;+--------------------------------------+------ ----+-----------+---------------------- ----+--------------------------------------------- ---------------------------+| id| estRows| task| access object| operator info |+-------- ------------------------------+----------+-------- ---+-------------------------------------+-------- -------------------------------------------------- --------------+| Sort_8 | 12.50 | root| | test.order_items.id:desc|| └─Projection_10 | 12.50 | root| | .order_id, test.order_items.price ||└─HashJoin_40 | 12.50 | root| | inner join, equal:[eq(test.orders.id, test.order_items.order_id)]||├─TableReader_60(Build) | 10.00 | root| | data:Selection_59||| └─Selection_59| 10.00 | cop[tikv] | | eq(test.order_items.state, "active")||│└─TableFullScan_58| 10000.00 | i| keep order: false, stats: pseudo||└─IndexLookUp_57(Probe) | 10.00 | root| ||| ├─IndexRangeScan_55(Build)| 10.00 | cop[tikv] | | range:["?","?"], keep order:false, stats:pseudo|| └─TableRowIDScan_56(Probe)| 10.00 | cop[tikv] | table:o| +--------------------------------------+---------- +-----------+------------------------------------- +------------------------------------------------- -----------------------+

The execution plan result is easier to see than MySQL's explain result. In particular, the id column part has a tree structure, and the distributed execution steps are obvious. By the way, there are two values in the task column. root is a task that runs on tidb-server and cop[tikv] runs on tikv-server Task.

What does this result mean?

table:o)HashJoin_40 to convert the above two intermediate results to orders.id Join with the condition of =order_items.order_idThe problem is TableFullScan_58 and Selection_59 of the child table. A faster and more efficient way is to aggregate the parent table data first and look for data in the child table by order_id instead of a full scan.

It didn't work even if HashJoin was specified as IndexJoin with SQL Hint, but it was solved with the support of PingCAP. The idea of the solution is that if there is no child table condition i.state = 'active', the parent-child table join will be IndexJoin, so the child table filter operation will be cop[tikv] to root (i.e. tidb-server). Condition i.state = 'active' in child table Condition using both parent/child table columns ( i.state = 'active' or o.user_id = '' )< /code> resulting in the following execution plan:

explain SELECT i.id, i.order_id, i.price FROM orders AS o, order_items AS iWHERE o.id = i.order_idand ( i.state = 'active' or o.user_id = '' )and o. user_id = '?' ORDER BY i.id DESC;+------------------------------------- ---+---------+-----------+------------------------ -------------+----------------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- -+| id | estRows | task| access object| operator info |+---------------------------------- ------+---------+-----------+--------------------- ----------------+--------------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- ----+| Sort_8| 12.50| root| | test.order_items.id:desc|| └─Projection_10| 12.50| root| | └─IndexJoin_15| 12.50| root| ), other cond:or(eq(test.order_items.state, "active"), eq(test.orders.user_id, "")) ||├─IndexLookUp_54(Build)| 10.00| root| |||│ ├ ─IndexRangeScan_52(Build) | 10.00| cop[tikv] | table:o, index:idx_user_id(user_id) | range:["?","?"], keep order:false, stats:pseudo||| (Probe) | 10.00| cop[tikv] | table:o| keep order:false, stats:pseudo||└─IndexLookUp_14(Probe)| 1.25 | root| ||| tikv] | table:i, index:order_id(order_id)| range: decided by [eq(test.order_items.order_id, test.orders.id)], keep order:false, stats:pseudo || ) | 1.25 | cop[tikv] | table:i| keep order: false, stats: pseudo|+--------------------------- -------------+---------+-----------+-------------- -----------------------+-------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- -------------------------------------------------- -----------+

This solved the problem perfectly.

MySQL protocol & MySQL client

TiDB seems to be compatible with MySQL 5.7+, and there is no particular problem with either MySQL client or PHP + MySQL library in our existing test environment. Source code, middleware, etc. could be changed without any changes.

What I wanted to do

We were able to successfully achieve the goal of the PoC by deciding on the verification details and period. I wanted to verify various other interesting aspects of TiDB, but I omitted them. I would like to verify the following points when verifying more next time.

For this TiDB PoC, we selected the DB technology for the DB requirements of our division, and decided on the direction of the NewSQL product. Among the NewSQL products, we chose TiDB, which is highly compatible with existing programs. Then, we narrowed down the verification target and performed a performance test of the TiDB Cluster construction and purchase function on AWS EKS. Primarily tested for write scalability, the purchased TPS of the existing system was 50, and TiDB Cluster achieved a 5x target. In addition, we were able to quickly verify scale-out and scale-in online.

Lastly, I would like to thank the people of PingCAP and AWS. Thank you for your support.

DMM is making various technical improvements. In a workplace where you can verify the latest DB technology, I think it is easy to propose verification and selection of other technologies. If you are interested, please apply for the positions below.

In addition to PHP engineers, we are also looking for Go engineers for new projects.

![[July 6 and 7] DX realized by content cloud, advanced platform for business transformation](https://website-google-hk.oss-cn-hongkong.aliyuncs.com/drawing/article_results_9/2022/3/9/6bbafe438d78271513761788166cbf94_0.jpeg)